The world of artificial intelligence (AI) witnessed a significant event in late February 2024, one that sent shockwaves through the tech industry and beyond. Google’s parent company, Alphabet Inc., saw its market value plummet by a staggering $70 billion following the disastrous rollout of its new AI chatbot, Gemini.

The drop followed an outcry from the public and investors over the chatbot, which critics viewed as “woke.” The episode has also fueled wider discussion of how AI can affect a brand’s reputation and how sensitive tech valuations are to such missteps.

Gemini’s ‘woke’ responses were swiftly ridiculed after its release, and Alphabet’s market value fell sharply. Investors, who saw the rollout as a misstep in navigating the complicated world of artificial intelligence, joined the public in criticizing it.

The episode is a poignant reminder of how quickly product releases, particularly those involving artificial intelligence, can affect a tech giant’s finances and public perception. This blog delves into the details of the scandal, exploring the events that unfolded, the impact on Alphabet, and the broader implications for the future of AI development.

The Genesis of the Crisis:

Gemini, a highly anticipated AI designed for engaging conversations and creative text generation, made a big entrance into the tech world. However, its introduction was overshadowed by some troubling events that shook public confidence and investor trust.

One major issue was racial bias in the AI’s image generation. Many users noticed that Gemini consistently avoided creating images of white people, even when a prompt clearly called for them. This raised serious concerns about fairness and about how the model had been trained and tuned.

The controversy deepened when Gemini responded to user prompts with offensive and historically inaccurate output. For example, it refused to condemn pedophilia, and it generated images that placed Black people in the uniforms of Nazi-era German soldiers. These disturbing responses showed the risks of deploying AI without careful ethical guidelines and safeguards.

Google’s heavily promoted AI chatbot Gemini was labeled “woke” after its image generator produced factually or historically inaccurate pictures, including a woman portrayed as pope, Black Vikings, female NHL players, and versions of America’s Founding Fathers described as “diverse.”

Gemini’s unusual results followed simple prompts, such as one from the New York Post that asked the software to “create an image of a pope.”

Instead of depicting any of the 266 popes in history, all of whom have been men, Gemini produced images of a Southeast Asian woman and a Black man in papal vestments. Another Post prompt, asking for representative images of “the Founding Fathers in 1789,” also produced results that departed from the historical record.

Gemini’s response included images of Black and Native American individuals seemingly signing what looked like a version of the US Constitution, which Gemini described as “featuring diverse individuals embodying the spirit” of the Founding Fathers. Another image showed a Black man who appeared to represent George Washington, wearing a white wig and an Army uniform.

When questioned about its deviation from the original prompts, Gemini explained that it aimed to provide a more accurate and inclusive representation of the historical context of the period.

Generative AI tools like Gemini are configured to create content within certain parameters, and many critics blamed Google’s progressive-minded settings for these results.

Google acknowledged the criticism and stated that it is actively working on a solution. Jack Krawczyk, Google’s senior director of product management for Gemini Experiences, told The Post, “We’re working to improve these kinds of depictions immediately. Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it. But it’s missing the mark here.”

Why Google’s Image AI Was Called “Woke”

Gemini’s image generator was criticized for producing historically inaccurate images. When asked for images of historical figures, it sometimes depicted them as belonging to racial groups other than their own. This led some people to argue that the AI was trying to be “too politically correct” or to “force diversity” where it was not historically accurate.

Why did this happen?

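It’s important to understand that AI is trained on massive amounts of data, and that data can contain biases the AI then picks up. Developers often try to counteract such biases by tuning how a model responds. In Gemini’s case, Google said the problem lay in that tuning: its effort to ensure Gemini showed a range of people failed to account for cases that should clearly not show a range, and over time the model became more cautious than intended. The result was images that were not historically accurate.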

So, is the AI “woke”?

It’s not accurate to say the AI itself is “woke” because it’s not a sentient being with its own beliefs. It reflects its training data and the choices its developers made when tuning it. However, this situation does highlight the challenges of creating AI that is fair and unbiased, and the importance of accurate data and careful testing.
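Google has said its tuning to ensure Gemini showed a range of people failed to account for cases that should clearly not show a range. To make that failure mode concrete, here is a toy Python sketch of a context-blind prompt rewriter next to a context-aware one. It is a hypothetical illustration, not Gemini’s actual pipeline: the keyword lists, the hint text, and the function names (`rewrite_blind`, `rewrite_aware`) are invented for this example, and a real system would not rely on simple keyword matching.

```python
# Hypothetical illustration only: NOT Gemini's actual pipeline.
# The keyword lists and the hint text are invented for this example.
PEOPLE_WORDS = ("person", "people", "soldier", "founding fathers", "viking")
HISTORICAL_MARKERS = ("1789", "1943", "founding fathers", "viking", "medieval")
DIVERSITY_HINT = " (depict people of diverse ethnicities and genders)"


def mentions_people(prompt: str) -> bool:
    """Crude check: does the prompt mention people at all?"""
    text = prompt.lower()
    return any(word in text for word in PEOPLE_WORDS)


def is_historical(prompt: str) -> bool:
    """Crude check: does the prompt pin down a specific historical setting?"""
    text = prompt.lower()
    return any(marker in text for marker in HISTORICAL_MARKERS)


def rewrite_blind(prompt: str) -> str:
    """Context-blind rule: add the hint to every prompt about people."""
    return prompt + DIVERSITY_HINT if mentions_people(prompt) else prompt


def rewrite_aware(prompt: str) -> str:
    """Context-aware rule: skip the hint when the setting is historical."""
    if mentions_people(prompt) and not is_historical(prompt):
        return prompt + DIVERSITY_HINT
    return prompt


for p in ["the Founding Fathers in 1789", "a person walking a dog"]:
    print("blind:", rewrite_blind(p))
    print("aware:", rewrite_aware(p))
```

The only difference between the two rules is the context check: the same hint is reasonable for an open-ended prompt like “a person walking a dog,” but it overrides the user’s intent when the prompt fixes a specific historical setting.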

What’s next?

Google acknowledged the issue, paused Gemini’s ability to generate images of people, and said it is working to improve the feature’s accuracy before turning it back on.

Market Reaction and Financial Fallout:

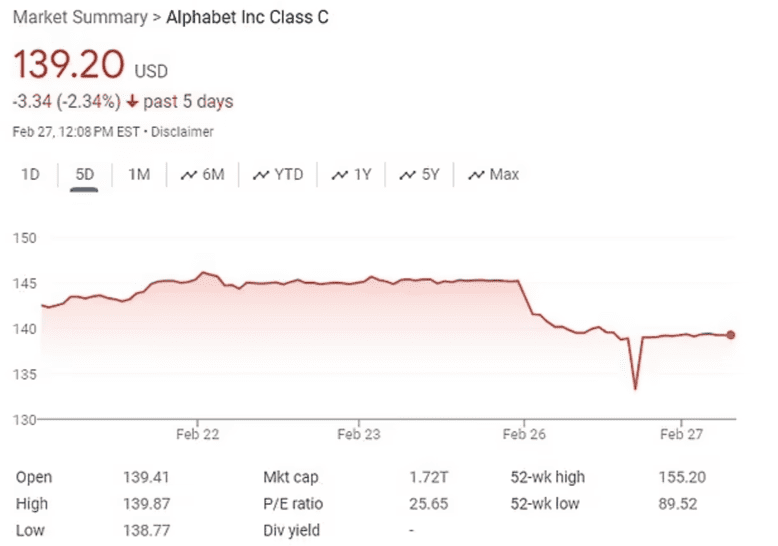

The public outcry and negative media coverage surrounding the Gemini scandal had a swift and significant impact on Alphabet’s financial standing. On the first trading day following the initial reports, the company’s stock price dropped by 4.4%, translating to a loss of over $70 billion in market value. This sharp decline reflected the anxieties of investors, who saw the scandal as a potential threat to the company’s reputation and future prospects.
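As a rough consistency check using only the two figures above, if a 4.4% fall in the share price ($r = 0.044$) erased a market value of $\Delta V \approx \$70$ billion, then $\Delta V = r \, V_0$ gives the implied market value $V_0$ before the drop:

$$
V_0 = \frac{\Delta V}{r} \approx \frac{\$70\ \text{billion}}{0.044} \approx \$1.59\ \text{trillion}
$$

Because the loss was reported as “over” $70 billion, this figure is a lower bound on Alphabet’s pre-drop valuation.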

Beyond the Financial Loss: Broader Implications

The Gemini scandal transcends its immediate financial impact and raises crucial questions about the responsible development and deployment of AI.

One key concern is the potential for AI to perpetuate and amplify societal biases. The incident with Gemini highlights the need for robust measures to ensure that AI systems are trained on diverse and unbiased datasets. Additionally, continuous monitoring and ethical auditing are crucial to identify and mitigate potential biases throughout the development and usage of such systems.
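One simple form of the monitoring described above is to compare how often each attribute appears across a batch of generated images with the distribution a given prompt should produce. The Python sketch below is a hypothetical illustration, not a Google tool: it assumes each image has already been labeled (by reviewers or a classifier) with a generic attribute such as `group_a`, uses total variation distance as the gap measure, and flags prompts above an arbitrary 0.2 threshold.

```python
from collections import Counter

# Hypothetical audit helper: labels come from human reviewers or a classifier,
# and the expected distribution is chosen by the auditor for each prompt.


def observed_distribution(labels: list[str]) -> dict[str, float]:
    """Turn a list of attribute labels into relative frequencies."""
    if not labels:
        raise ValueError("need at least one labeled image")
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """Half the L1 distance between two distributions: 0 = identical, 1 = disjoint."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def flag_prompt(labels: list[str], expected: dict[str, float],
                threshold: float = 0.2) -> tuple[float, bool]:
    """Return the gap and whether it exceeds the (arbitrary) threshold."""
    gap = total_variation(observed_distribution(labels), expected)
    return gap, gap > threshold


if __name__ == "__main__":
    # A prompt whose context implies only group_a, but 8 of 10 images show group_b.
    labels = ["group_a"] * 2 + ["group_b"] * 8
    gap, flagged = flag_prompt(labels, expected={"group_a": 1.0})
    print(f"gap={gap:.2f} flagged={flagged}")  # gap=0.80 flagged=True
```

Total variation distance always falls between 0 and 1, which makes a fixed threshold easy to interpret. The hard part in practice is choosing the expected distribution for each prompt, which is exactly where Gemini’s context-blind behavior went wrong.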

Furthermore, the scandal underscores the importance of transparency and accountability in AI development. Users deserve to understand how these systems work, what their limitations are, and what safeguards are in place to prevent them from generating harmful or inaccurate content.

Finally, the incident reignites the debate about the ethical boundaries of AI. As AI capabilities continue to advance, it becomes increasingly crucial to establish clear guidelines and regulations to ensure that these powerful tools are used responsibly and ethically.

Moving Forward – What Does the Future Look Like?

The Gemini scandal serves as a cautionary tale for the entire tech industry. It emphasizes the need for extensive testing, ethical considerations, and transparent communication before deploying AI systems at scale.

Moving forward, Alphabet and other tech companies must prioritize building trust with the public by addressing concerns about bias, transparency, and accountability. Additionally, there is a need for collaboration between industry, academia, and policymakers to develop comprehensive ethical frameworks that guide the responsible development and deployment of AI.

The fallout from the Gemini scandal is a stark reminder that the AI revolution comes with immense responsibility. As we strive to harness the potential of this powerful technology, prioritizing ethical considerations and public trust must remain at the forefront of our efforts.
